Scene Files

January 5, 2002

This document describes the LWSC version 3 file format for 3D scenes used by LightWave 6.0 and later. At the moment, it's an incomplete rough draft.

- File References

- Item Numbers

- Blocks

- Envelopes

- Motion Data

- Clips

- Textures

- File Structure

- Scene

- Objects

- Global Illumination

- Lights

- Cameras

- Effects

If you've worked with version 1 of the format (version 2 was an unreleased interim format), version 3 will seem quite familiar. Scene files are still text files containing keyword-value pairs. The most important difference is in the way keyframe data is stored, but obviously there are many others comprising features not available in LightWave prior to 6.0.

The first half of this document treats data units that can occur in scene files in a number of different contexts (envelopes and textures, for example). Most of these data units are stored on multiple lines. To make it easier to distinguish keywords from values, and to make the block structure of these multiline data units more apparent, keywords are shown in boldface type.

The data units in the second half are analogous to Layout commands (and in fact are treated in a similar way during scene loading). These are listed roughly in the order they typically occur in the scene file. The data types of the values (or arguments) are denoted by a one-letter prefix on the value name, as in nfirst or sfilename. (The same letters are used in C printf formatting.)

- n (number)

- An integer.

- g (float)

- A floating-point number.

- s (string)

- A string, such as a filename or channel name.

- x (hex)

- A hexadecimal number, such as an item identifier.

File References

Scene files don't contain the binary data defining objects, images or plug-ins. These are included in the scene by reference, using filenames and server names.

Filenames are written in a platform-neutral format. For absolute (fully qualified) paths, the first node represents a disk or similar storage device, and its name is separated from the rest of the path by a colon. Other nodes in the path are separated by forward slashes. disk:path/file is an absolute path, and path/subpath/file is a relative path. Relative paths are relative to the current content directory, a path set by the user and not explicitly written in the scene file. Relative paths allow the scene and the files it references to be moved easily to different file systems.
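As a minimal sketch of the filename convention described above, the following splits a platform-neutral name into its disk and path nodes. The function name `split_neutral_path` is hypothetical, and the sketch assumes that only the first colon in a name marks a disk.

```python
def split_neutral_path(name):
    """Split a platform-neutral filename into (disk, nodes).

    disk is None for relative paths. Assumption: the first colon,
    if any, separates the disk name from the rest of the path.
    """
    disk, sep, rest = name.partition(":")
    if not sep:
        # No colon: the whole name is a path relative to the content directory.
        disk, rest = None, name
    nodes = [node for node in rest.split("/") if node]
    return disk, nodes


print(split_neutral_path("disk:path/file"))
print(split_neutral_path("path/subpath/file"))
```

How the disk name maps to a local volume or drive is platform-specific and is not covered here.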

Plug-ins used by the scene are referenced by server name, the string that appears in the name field of the ServerRecord that every plug-in contains. Plug-ins can write their own data in scene files, in any format they like. This data almost always immediately follows the plug-in reference in the file, and it belongs to the plug-in. Its internals aren't part of the scene file format.

Item Numbers

When a scene file needs to refer to specific items to establish item relationships (parenting, for example), it uses item numbers. Items are numbered in the order in which they appear in the file, starting with 0.

Item numbers can be written in one of two ways, depending on which keyword they're used with. In general, if the type of the item (object, bone, light, camera) can be determined from the keyword alone, the item number will simply be the ordinal, written as a decimal integer. When the keyword can be used with items of more than one type, the item number is an unsigned integer written as an 8-digit hexadecimal string, the format produced by the C-language "%8X" print format specifier, and the high bits identify the item type.

The first hex digit (most significant 4 bits) of the hex item number string identifies the item type.

1 - Object

2 - Light

3 - Camera

4 - Bone

The other digits make up the item number, except in the case of bones. For bones, the next 3 digits (bits 16-27) are the bone number and the last 4 digits (bits 0-15) are the object number for the object the bone belongs to. Some examples:

10000000- the first object

20000000- the first light

4024000A- the 37th bone (24 hex) in the 11th object (0A hex)
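The bit layout above can be sketched as a small decoder and encoder. The function names and the dictionary result are illustrative choices, not part of the format; indexes are returned 0-based, so the "37th bone" decodes to bone index 36.

```python
ITEM_TYPES = {1: "object", 2: "light", 3: "camera", 4: "bone"}


def decode_item_id(hex_id):
    """Decode an 8-digit hex item number into its type and index fields."""
    value = int(hex_id, 16)
    kind = ITEM_TYPES[value >> 28]          # most significant 4 bits
    if kind == "bone":
        return {
            "type": kind,
            "bone": (value >> 16) & 0xFFF,   # bits 16-27
            "object": value & 0xFFFF,        # bits 0-15
        }
    return {"type": kind, "index": value & 0x0FFFFFFF}


def encode_bone_id(bone, obj):
    """Build the hex item number for a bone (0-based indexes)."""
    return "%08X" % ((4 << 28) | (bone << 16) | obj)


print(decode_item_id("4024000A"))
print(encode_bone_id(0x24, 0x0A))
```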

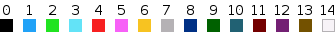

Visibility and Color

In Layout's interface, users can set the visibility of each item individually, and when items are displayed as wireframes, they can choose the wireframe color from a table. For items other than objects, visibility is either on (1) or off (0), but for objects, the state can be any of the following.

0 - hidden

1 - bounding box

2 - vertices only

3 - wireframe

4 - front face wireframe

5 - shaded solid

6 - textured shaded solid

The available colors and their indexes are

(Astute readers comparing this color table to the one for the SDK's ItemColor command will note that the light and dark color indexes are interchanged in the two tables.)

The default visibility and color are stored in the LightWave configuration file. Unless this has been altered, objects are drawn as textured shaded solids (6) and their wireframe color is cyan (3). Lights are magenta (5), cameras are green (2), and bones are dark blue (9).

Blocks

Information in a scene file is organized into blocks, the ASCII text analog of the chunks described in the IFF specification. Each block consists of an identifier or name followed by some data. The format of the data is determined by the block name. Block names resemble C-style identifiers. In particular, case is significant, and they don't contain spaces or most other non-alphanumeric characters.

A single-line block is delimited by the newline that terminates the line. Multiline blocks are delimited by curly braces (the { and } characters, ASCII codes 123 and 125). The name of a multiline block follows the opening curly brace on the same line. The curly brace and the name are separated by a single space. The data follows on one or more subsequent lines. Each line of data is indented using two spaces. The closing brace is on a line by itself and is not indented.

Individual data elements are separated from each other by a single space. String data elements are enclosed in double quotes and may contain spaces.

Blocks can be nested. In other words, the data of a block can include other blocks. A block that contains nested blocks is always a multiline block. At each nesting level, the indentation of the data is incremented by two additional spaces.

    SingleLineBlock data
    { MultiLineBlock
      data
      { NestedMultiLineBlock
        data
      }
    }
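The block rules above are enough to sketch a small reader that turns scene text into a nested structure. This is an illustrative sketch only: `parse_blocks` is a hypothetical name, single-line blocks are kept as raw strings, and it assumes plug-in data never contains lines that look like block delimiters, which the format does not guarantee.

```python
def parse_blocks(lines):
    """Parse scene-file lines into a nested list.

    Single-line blocks become strings; multiline blocks become
    (name, children) tuples, where children is a list of the same kind.
    """
    root = []
    stack = [root]
    for raw in lines:
        line = raw.strip()              # indentation is cosmetic here
        if not line:
            continue
        if line.startswith("{ "):       # opening brace, then the block name
            children = []
            stack[-1].append((line[2:], children))
            stack.append(children)
        elif line == "}":               # closing brace on a line by itself
            if len(stack) == 1:
                raise ValueError("unbalanced closing brace")
            stack.pop()
        else:
            stack[-1].append(line)
    if len(stack) != 1:
        raise ValueError("unclosed block")
    return root


text = """SingleLineBlock data
{ MultiLineBlock
  data
  { NestedMultiLineBlock
    data
  }
}"""
print(parse_blocks(text.splitlines()))
```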

Envelopes

An envelope defines a function of time. For any animation time, an envelope's parameters can be combined to generate a value at that time. Envelopes are used to store position coordinates, rotation angles, scale factors, camera zoom, light intensity, texture parameters, and anything else that can vary over time.

The envelope function is a piecewise polynomial curve. The function is tabulated at specific points, called keys. The curve segment between two adjacent keys is called a span, and values on the span are calculated by interpolating between the keys. The interpolation can be linear, cubic, or stepped, and it can be different for each span. The value of the function before the first key and after the last key is calculated by extrapolation.

In scene files, an envelope is stored in a block named Envelope that contains one or more nested Key blocks and one Behaviors block.

    { Envelope
      nkeys
      Key value time spantype p1 p2 p3 p4 p5 p6
      Key ...
      Behaviors pre post
      { ChannelHandler
        server-name
        flags
        server-data
      }
    }

The nkeys value is an integer, the number of Key blocks in the envelope. Envelopes must contain at least one Key block. The contents of a Key block are as follows.

value- The key value, a floating-point number. The units and limits of the value depend on what parameter the envelope represents.

time- The time in seconds, a float. This can be negative, zero or positive. Keys are listed in the envelope in increasing time order.

spantype- The curve type, an integer. This determines the kind of interpolation that will be performed on the span between this key and the previous key, and also indicates what interpolation parameters are stored for the key.

0 - TCB (Kochanek-Bartels)

1 - Hermite

2 - 1D Bezier (obsolete, equivalent to Hermite)

3 - Linear

4 - Stepped

5 - 2D Bezier

p1...p6- Curve parameters. The data depends on the span type.

- TCB, Hermite, 1D Bezier

- The first three parameters are tension, continuity and bias. The fourth and fifth parameters are the incoming and outgoing tangents. The sixth parameter is ignored and should be 0. Use the first three to evaluate TCB spans, and the other two to evaluate Hermite spans.

- 2D Bezier

- The first two parameters are the incoming time and value, and the second two are the outgoing time and value.

The Behaviors block contains two integers.

pre, post- Pre- and post-behaviors. These determine how the envelope is extrapolated at times before the first key and after the last one.

- 0 - Reset

- Sets the value to 0.0.

- 1 - Constant

- Sets the value to the value at the nearest key.

- 2 - Repeat

- Repeats the interval between the first and last keys (the primary interval).

- 3 - Oscillate

- Like Repeat, but alternating copies of the primary interval are time-reversed.

- 4 - Offset Repeat

- Like Repeat, but offset by the difference between the values of the first and last keys.

- 5 - Linear

- Linearly extrapolates the value based on the tangent at the nearest key.

The Behaviors block can optionally be followed by one or more ChannelHandler blocks. Each of these contains the name of the plug-in and its private data.

The source code in the sample/envelope directory of the LightWave plug-in SDK demonstrates how envelopes are evaluated.
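The pre- and post-behaviors can be illustrated with a simplified evaluator. This is a sketch under stated assumptions, not the SDK's implementation: every span is treated as Linear (span type 3), keys are `(time, value)` pairs with strictly increasing times, and the Linear behavior uses the slope of the nearest span in place of the key tangent. All names are hypothetical.

```python
import math

RESET, CONSTANT, REPEAT, OSCILLATE, OFFSET_REPEAT, LINEAR = range(6)


def _interp(keys, t):
    """Linear interpolation between the two keys that bracket t."""
    for (t0, v0), (t1, v1) in zip(keys, keys[1:]):
        if t0 <= t <= t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    raise ValueError("time outside key range")


def evaluate(keys, t, pre=CONSTANT, post=CONSTANT):
    """Evaluate a linear-span envelope at time t (seconds)."""
    first_t, first_v = keys[0]
    last_t, last_v = keys[-1]
    if first_t <= t <= last_t:
        return first_v if len(keys) == 1 else _interp(keys, t)
    before = t < first_t
    behavior = pre if before else post
    near_t, near_v = keys[0] if before else keys[-1]
    span = last_t - first_t                 # length of the primary interval
    if behavior == RESET:
        return 0.0
    if behavior == CONSTANT or span == 0:
        return near_v
    if behavior == LINEAR:
        # Assumption: slope of the nearest span stands in for the tangent.
        (t0, v0), (t1, v1) = keys[:2] if before else keys[-2:]
        return near_v + (v1 - v0) / (t1 - t0) * (t - near_t)
    cycles = math.floor((t - first_t) / span)
    local = min(max(t - cycles * span, first_t), last_t)
    if behavior == REPEAT:
        return _interp(keys, local)
    if behavior == OFFSET_REPEAT:
        return _interp(keys, local) + cycles * (last_v - first_v)
    if behavior == OSCILLATE:
        if cycles % 2:                      # odd copies run backwards
            local = first_t + last_t - local
        return _interp(keys, local)
    raise ValueError("unknown behavior")


keys = [(0.0, 0.0), (1.0, 10.0), (2.0, 4.0)]
print(evaluate(keys, 2.5, post=OSCILLATE))
```

TCB, Hermite and Bezier spans need the per-key parameters p1...p6 and are left out of this sketch.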

The presence of an envelope can be signified in several different ways. The Channel block within motion data, described in the next section, is always followed by the envelope for that channel. If a parameter value is listed in a single-line block, the value of the parameter may be written as the string "(envelope)". And in multiline blocks, one of the lines may be an envelope count, which will be followed by one or more envelopes if the count is non-zero.

This document will use "[e]" to indicate the possible presence of an envelope for all of these cases.

Motion Data

Item motion data is stored as a set of envelopes. Each envelope is preceded by a Channel block identifying the motion channel, and the channels are preceded by a single NumChannels block containing the number of envelopes.

NumChannels 9

Channel 0 [e]

Channel 1 [e]

...

The Channel indexes are

0, 1, 2 - (x, y, z) position

3, 4, 5 - (heading, pitch, bank) rotation

6, 7, 8 - (sx, sy, sz) scale factors along each axis

NumChannels is usually 6 for cameras (since scaling cameras isn't meaningful) and 9 for other item types. If the item has a parent, the values in the envelopes are relative to the parent.

LightWave coordinates are left-handed, with Y up. Heading is rotation around Y, pitch is rotation around X, and bank is rotation around Z. All rotations are positive clockwise when viewed from the positive side of the rotation axis. Given matrices P, H and B representing rotation around each axis,

$$
P = \begin{pmatrix} 1 & 0 & 0 \\ 0 & \cos p & \sin p \\ 0 & -\sin p & \cos p \end{pmatrix}, \quad
H = \begin{pmatrix} \cos h & 0 & -\sin h \\ 0 & 1 & 0 \\ \sin h & 0 & \cos h \end{pmatrix}, \quad
B = \begin{pmatrix} \cos b & \sin b & 0 \\ -\sin b & \cos b & 0 \\ 0 & 0 & 1 \end{pmatrix}
$$

the transformation matrix M,

M = B P H

combines the rotations. To get the rotated point (x', y', z') from (x, y, z),

x' = M00 x + M10 y + M20 z

y' = M01 x + M11 y + M21 z

z' = M02 x + M12 y + M22 z

or, treating p = (x, y, z) as a row vector, simply

p' = p M

This is the form that matches the component equations above: the rows of M are the +X, +Y, +Z direction vectors for the item in world coordinates.
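A short sketch of the rotation math, following the component equations for x', y' and z' given above. The function names are hypothetical, and angles are taken in radians purely as a convention of this example.

```python
import math


def _matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_matrix(h, p, b):
    """Return M = B P H for heading h, pitch p and bank b (radians)."""
    ch, sh = math.cos(h), math.sin(h)
    cp, sp = math.cos(p), math.sin(p)
    cb, sb = math.cos(b), math.sin(b)
    P = [[1, 0, 0], [0, cp, sp], [0, -sp, cp]]
    H = [[ch, 0, -sh], [0, 1, 0], [sh, 0, ch]]
    B = [[cb, sb, 0], [-sb, cb, 0], [0, 0, 1]]
    return _matmul(_matmul(B, P), H)


def rotate(M, point):
    """Apply x' = M00 x + M10 y + M20 z, and likewise for y' and z'."""
    x, y, z = point
    return tuple(M[0][j] * x + M[1][j] * y + M[2][j] * z for j in range(3))


# A heading of 90 degrees turns the +Z axis toward +X.
M = rotation_matrix(math.radians(90), 0.0, 0.0)
print(rotate(M, (0.0, 0.0, 1.0)))
```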

Clips

Still images, image sequences and animation files are collectively called clips. Clips are used in textures and as background plates and spotlight filters, among other things. Clip references in scene files are stored in Clip blocks. The first block in a Clip is the source block containing information specific to the kind of clip it is, and following this are blocks for parameters that all clip types can share.

- Still Image

{ Clip { Still filename }

The source is a single still image referenced by a filename in platform-neutral format.

- Image Sequence

{ Clip { Sequence ndigits flags offset reserved start end file-prefix file-suffix }

The source is a numbered sequence of still image files. Each filename contains a fixed number (ndigits) of decimal digits that specify a frame number, along with a prefix (the part before the frame number, which includes the path) and a suffix (the part after the number, typically a PC-style extension that identifies the file format). The prefix and suffix are the same for all files in the sequence.

The flags include bits for looping and interlace. The offset is added to the current frame number to obtain the digits of the filename for the current frame. The start and end values define the range of frames in the sequence.

- Animation

{ Clip { Animation filename server-name flags server-data }

The source is a file loaded by an animation loader plug-in, typically an AVI, QuickTime or MPEG file. The server-name is the name of the loader plug-in. Any data after flags in the Animation block belongs to the plug-in.

- Reference (Clone)

{ Clip { Reference index id-string "Reference" name filename }

A reference clip is a clone of one of the other clip types. It shares the identifying information in the original clip but may have different image processing blocks following the type header.

The id-string is a machine-generated string that uniquely identifies the clone. The name is the scene name of the original clip as it appears to the user. For stills and animation files, it's the filename without the path. For image sequences, it's the filename prefix, without the path, followed by (sequence). The filename is the name of the file for stills and animations and the name of the first file in the sequence for sequences.

The index refers to the clip's position in LightWave's internal image list at the time the scene was saved. This isn't particularly meaningful in scene files.
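For image sequences, the filename for a frame can be assembled from the prefix, the zero-padded frame digits and the suffix. The sketch below shows only that step; `sequence_filename` is a hypothetical helper, and the looping flag and the start and end range are deliberately ignored.

```python
def sequence_filename(prefix, suffix, ndigits, offset, frame):
    """Build the image filename used for a given scene frame."""
    number = frame + offset               # offset is added to the current frame
    return f"{prefix}{number:0{ndigits}d}{suffix}"


print(sequence_filename("Images/shot", ".tga", 4, 0, 12))
```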

Blocks after the source block contain image processing parameters. Most of these include an enveloped value indicating the number of Envelope blocks that immediately follow it. If the parameter is enveloped, its static value is ignored during rendering, but it remains the value that will be assigned to the parameter if the envelope is removed.

- Time

Times start duration rate r1 r2

Defines source times for an animated clip.

- Contrast

{ Contrast delta [e]

RGB levels are altered in proportion to their distance from 0.5. Positive deltas move the levels toward one of the extremes (0.0 or 1.0), while negative deltas move them toward 0.5. The default is 0.

- Brightness

{ Brightness delta [e]

The delta is added to the RGB levels. The default is 0.

- Saturation

{ Saturation delta [e]

The saturation of an RGB color is defined as (max - min)/max, where max and min are the maximum and minimum of the three RGB levels. This is a measure of the intensity or purity of a color. Positive deltas turn up the saturation by increasing the max component and decreasing the min one, and negative deltas have the opposite effect. The default is 0.

- Hue

{ Hue delta [e]

The hue of an RGB color is an angle defined as

r is max: 1/3 (g - b)/(r - min)

g is max: 1/3 (b - r)/(g - min) + 1/3

b is max: 1/3 (r - g)/(b - min) + 2/3

with values shifted into the [0, 1] interval when necessary. The levels between 0 and 1 correspond to angles between 0 and 360 degrees. The hue delta rotates the hue. The default is 0.

- Gamma Correction

{ Gamma value [e]

Gamma correction alters the distribution of light and dark in an image by raising the RGB levels to a small power. By convention, the gamma is stored as the inverse of this power. A gamma of 0.0 forces all RGB levels to 0.0. The default is 1.0.

- Negative

Negative on-off

If non-zero, the RGB values are inverted, (1.0 - r, 1.0 - g, 1.0 - b), to form a negative of the image.

- Plug-in Image Filters

{ ImageFilterHandler server-name flags server-data

Plug-in image filters can be used to pre-filter an image before rendering. The filter has to be able to exist outside of the special environment of rendering in order to work here (it can't depend on functions or data that are only available during rendering). Filters are given by a server name, an enable flag, and optional data that belong to the plug-in.

- Plug-in Pixel Filters

{ PixelFilterHandler server-name flags server-data

Pixel filters may also be used as clip modifiers, and they are stored and used in a way that is exactly like image filters.
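A few of the simpler image processing parameters can be written directly from their descriptions. The sketch below covers brightness, negative, gamma and the saturation measure only. The function names are hypothetical, RGB levels are assumed to be non-negative floats, and no clamping is applied because the text does not specify any.

```python
def brightness(rgb, delta):
    """Add delta to each RGB level."""
    return tuple(c + delta for c in rgb)


def negative(rgb):
    """Invert each level: (1 - r, 1 - g, 1 - b)."""
    return tuple(1.0 - c for c in rgb)


def gamma_correct(rgb, gamma):
    """Raise levels to the power 1/gamma; a gamma of 0 forces black."""
    if gamma == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(c ** (1.0 / gamma) for c in rgb)


def saturation(rgb):
    """Saturation measure (max - min) / max, taken as 0 for black."""
    hi, lo = max(rgb), min(rgb)
    return 0.0 if hi == 0 else (hi - lo) / hi


print(gamma_correct((0.25, 0.25, 0.25), 2.0))
```

How the contrast, saturation and hue deltas are applied is described only qualitatively, so they are not sketched here.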

Textures

Mathematically, a texture is simply a function of three variables, usually the x, y and z coordinates of a point in space. Conceptually, a texture is an image mapping, using prerendered images, 2D or 3D images generated procedurally, or some combination. Scene files record textures used for clip mapping, displacement mapping and volumetric lights. Textures are also used extensively to modulate surface parameters, but surfaces are stored in object files.

A texture comprises one or more texture layers. Each layer can be an image map, a procedural, or a gradient, and each has its own mapping between texture and world coordinates. Layers are combined using a blending method set for each layer.

In scene files, a texture is stored in a block named TextureBlock that contains one or more nested Texture blocks representing each layer. A Texture block contains a header block, with parameters common to all layer types, followed by a list of attributes or parameters. The name of the header block identifies the layer type and can be ImageMap, Procedural or Gradient. For image maps and procedurals, the trailing attribute list includes a mapping block describing the projection. In outline form,

- texture
  - layer 1
    - header
      - ordinal string
      - channel
      - enable flag
      - opacity...
    - texture mapping
      - center
      - size...
    - other attributes...
  - layer 2...

A simple texture containing a single image map layer would look like this.

    { TextureBlock
      { Texture
        { ImageMap
          "(-128)"
          Channel 1954051186
          { Opacity
            0
            1
            0
          }
          Enable 1
          Negative 0
        }
        { TextureMap
          { Center
            0 0 0
            0
          }
          { Size
            1 1 1
            0
          }
          { Rotation
            0 0 0
            0
          }
          { Falloff
            0
            0 0 0
            0
          }
          RefObject "(none)"
          Coordinates 0
        }
        Projection 0
        Axis 2
        { Image
          { Clip
            { Still
              "Images/gray.jpg"
            }
          }
        }
        WrapOptions 1 1
        { WidthWrap
          1
          0
        }
        { HeightWrap
          1
          0
        }
        AntiAliasing 1 1
        PixelBlending 1
      }
    }

As you read through this, it helps to keep the hierarchical structure firmly in mind. The texture (TextureBlock) contains one layer (Texture), and this is stored in three parts: the header (ImageMap), the mapping (TextureMap) and the attribute list (Projection, Axis, WrapOptions, Antialiasing and PixelBlending).

- Texture Header

{ layer-type ordinal Channel 1954051186 { Opacity type value [e] } Enable on-off Negative on-off }

The layer type can be ImageMap, Procedural or Gradient. The number after the Channel keyword is just the four characters 'txtr' written as an unsigned integer in big-endian byte order. It isn't particularly meaningful. The opacity value is used to blend this layer with the others. If Enable is 0, the layer won't participate in the calculation of the texture value.

The ordinal determines this layer's place in the order of evaluation of the layers. Internally, the ordinal is an arbitrary-length byte string, with each byte representing a digit in a base-255 fraction. Ordinals are compared using the standard C runtime function strcmp. In scene files, each byte is written as a signed 8-bit integer, and bytes are delimited by parentheses.

To understand how LightWave uses these, imagine that instead of strings, it used floating-point fractions as the ordinals. Whenever LightWave needed to insert a new layer between two existing layers, it would find the new ordinal for the inserted layer as the average of the other two, so that a layer inserted between ordinals 0.5 and 0.6 would have an ordinal of 0.55.

But floating-point ordinals would limit the number of insertions to the (fixed) number of bits used to represent the mantissa. Ordinal strings are infinite-precision fractions written in base 255, using the ASCII values 1 to 255 as the digits (0 isn't used, since it's the special character that marks the end of the string).

Ordinals can't end on a 1, since that would prevent arbitrary insertion of other layers. A trailing 1 in this system is like a trailing 0 in decimal, which can lead to situations like this,

0.5 "\x80"

0.50 "\x80\x01"

where there's no daylight between the two ordinals for inserting another layer.

- Texture Mapping

{ TextureMap { Center x y z [e] } { Size x y z [e] } { Rotation heading pitch bank [e] } { Falloff type x y z [e] } RefObject object-name Coordinates coord-system }

The texture mapping block describes the mapping from object or world space to texture space. The defaults for the center and rotation components are 0, and for size they're 1.0.

Texture effects may fall off with distance from the texture center. The (x y z) falloff vector represents a rate per unit distance along each axis. The type can be

- 0 - Cubic

- Falloff is linear along all three axes independently.

- 1 - Spherical

- Falloff is proportional to the Euclidean distance from the center.

- 2 - Linear X

3 - Linear Y

4 - Linear Z

- Falloff is linear only along the specified axis. The other two vector components are ignored.

RefObject specifies a reference object for the texture. The reference object is given by name, and the scene position, rotation and scale of the object are combined with the layer's mapping parameters to compute the texture mapping. If the object name is "(none)" or the RefObject block is missing, no reference object is used.

The coordinate system can be 0 for object coordinates (the default if the block is missing) or 1 for world coordinates.
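The texture header's ordinal string and Channel number can both be decoded with a few lines of code. This is a sketch with hypothetical function names. It relies on the fact that Python compares `bytes` objects lexicographically by unsigned byte value, which gives the same ordering as strcmp for strings without embedded zero bytes.

```python
import re


def parse_ordinal(text):
    """Convert scene-file ordinal text such as "(-128)(1)" to bytes."""
    # Each parenthesized number is a signed 8-bit integer; mask to unsigned.
    return bytes(int(n) & 0xFF for n in re.findall(r"\((-?\d+)\)", text))


def format_ordinal(data):
    """Write ordinal bytes back out as signed, parenthesized integers."""
    return "".join(f"({b - 256 if b > 127 else b})" for b in data)


def channel_tag(value):
    """Recover the four characters packed big-endian into the Channel number."""
    return value.to_bytes(4, "big").decode("ascii")


first = parse_ordinal('"(-128)"')
second = parse_ordinal('"(-128)(1)"')
print(first < second)            # layers sort by byte-wise comparison
print(channel_tag(1954051186))
```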

Parameters specific to each layer type are listed in blocks after the header and mapping blocks.

Image Maps

Texture blocks with a header type of ImageMap are image mapped layers.

- Projection Mode

Projection mode

The projection defines how 2D coordinates in the image are transformed into 3D coordinates in the scene. In the following list of projections, image coordinates are called r (horizontal) and s (vertical).

- 0 - Planar

- The image is projected on a plane along the major axis (specified in the Axis block). r and s map to the other two axes.

- 1 - Cylindrical

- The image is wrapped cylindrically around the major axis. r maps to longitude (angle around the major axis).

- 2 - Spherical

- The image is wrapped spherically around the major axis. r and s map to longitude and latitude.

- 3 - Cubic

- Like Planar, but projected along all three axes. The dominant axis of the geometric normal selects the projection axis for a given surface spot.

- 4 - Front Projection

- The image is projected on the current camera's viewplane. r and s map to points on the viewplane.

- 5 - UV

- r and s map to points (u, v) defined for the geometry using a vertex map (identified in the VertexMap block).

- Major Axis

Axis axis

The major axis used for planar, cylindrical and spherical projections. The value is 0, 1 or 2 for the X, Y or Z axis.

- Image Map

{ Image { Clip ...

The Image block contains a Clip block.

- Image Wrap Options

WrapOptions wopt hopt

Specifies how the color of the texture is derived for areas outside the image.

- 0 - Reset

- Areas outside the image are assumed to be black. The ultimate effect of this depends on the opacity settings. For an additive texture layer on the color channel, the final color will come from the preceding layers or from the base color of the surface.

- 1 - Repeat

- The image is repeated or tiled.

- 2 - Mirror

- Like repeat, but alternate tiles are mirror-reversed.

- 3 - Edge

- The color is taken from the image's nearest edge pixel.

If no wrap options are specified, 1 is assumed.
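The four wrap modes can be expressed as a mapping of an image coordinate back into the image. The sketch assumes coordinates are normalized so that the image spans [0, 1] along each direction, which is an assumption of this example rather than something the format states. `wrap_coordinate` is a hypothetical name, and Reset is signalled by returning None.

```python
import math

RESET, REPEAT, MIRROR, EDGE = range(4)


def wrap_coordinate(u, mode=REPEAT):
    """Map coordinate u into [0, 1], or return None under Reset."""
    if 0.0 <= u <= 1.0:
        return u
    if mode == RESET:
        return None                      # outside the image: treated as black
    if mode == EDGE:
        return min(max(u, 0.0), 1.0)     # nearest edge pixel
    tile = math.floor(u)
    frac = u - tile
    if mode == MIRROR and tile % 2:      # odd tiles are mirror-reversed
        frac = 1.0 - frac
    return frac


print(wrap_coordinate(1.25, REPEAT), wrap_coordinate(1.25, MIRROR))
```

The same function would be applied separately with wopt for the horizontal coordinate and hopt for the vertical one.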

- Image Wrap Amount

{ WidthWrap cycles [e] } { HeightWrap cycles [e] }

For cylindrical and spherical projections, these parameters control how many times the image repeats over each full interval.

- UV Vertex Map

VertexMap vmapname

For UV projection, which depends on texture coordinates at each vertex, this selects the name of the TXUV vertex map that contains those coordinates. (Since vertex maps associate values with object vertices, they're stored in object files.)

- Antialiasing

Antialiasing enabled strength

The texture antialiasing strength is proportional to the width of the sample filter, so larger values sample a larger area of the image. Texture antialiasing is enabled by default, and the default strength is 1.0.

- Pixel Blending

PixelBlending enabled

Pixel blending enlarges the sample filter when it would otherwise be smaller than a single image map pixel. This blurs the pixelated appearance of textures that are too close to the camera.

- Sticky Projection

StickyProjection enabled time

The "sticky" or fixed projection time for front projection image maps. When on, front projections will be fixed at the given time.

- Texture Amplitude

{ TextureAmplitude amplitude [e]

Appears in image texture layers used for bump mapping. Texture amplitude scales the bump height derived from the pixel values. The default is 1.0.

Procedural Textures

Texture blocks of type Procedural are procedural textures that modulate a

value algorithmically.

- Axis

Axis axis

If the procedural has an axis, it may be defined with this chunk using a value of 0, 1 or 2.

- Basic Value

TextureValue value

Procedurals are often modulations between the current channel value and another value, given here. This may be a scalar or a vector.

- Algorithm and Parameters

{ Func algorithm-name data

The Func block names the procedural and stores its parameters. The name will often map to a plug-in name. The variable-length data following the name belongs to the procedural.

Gradient Textures

Texture blocks of type Gradient are gradient textures that modify a value by mapping an input parameter through an arbitrary transfer function. Gradients are represented to the user as a line containing keys. Each key is a color, and the gradient function is an interpolation of the keys in RGB space. The input parameter selects a point on the line, and the output of the texture is the value of the gradient at that point.

- Parameter Name

ParamName param-name

The input parameter. Possible values include

"Previous Layer"

"Bump"

"Slope"

"Incidence Angle"

"Light Incidence"

"Distance to Camera"

"Distance to Object"

"X Distance to Object"

"Y Distance to Object"

"Z Distance to Object"

"Weight Map"

- Item Name

ItemName item-name

The name of a scene item. This is used when the input parameter is derived from a property of an item in the scene.

- Gradient Range

GradStart start-frame GradEnd end-frame

The start and end of the input range. These values only affect the display of the gradient in the user interface. They don't affect rendering.

- Repeat Mode

GradRepeat repeat-mode

The repeat mode. This is currently undefined and set to 0 by default.

- Key Values

KeyFloats input r g b a (input r g b a...)

The transfer function is defined by an array of keys, each with an input value and an RGBA output vector. Given an input value, the gradient can be evaluated by selecting the keys whose positions bracket the value and interpolating between their outputs. If the input value is lower than the first key or higher than the last key, the gradient value is the value of the closest key.

- Key Parameters

KeyInts interpolation (interpolation...)

An array of integers defining the interpolation for the span preceding each key. Possible values include

0 - Linear

1 - Spline

2 - Step
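Gradient evaluation as described under Key Values can be sketched as follows. The sketch handles Linear interpolation (0) only, assumes the KeyFloats values have already been read into a flat list of numbers, and assumes key inputs are strictly increasing. The function names are hypothetical.

```python
def parse_key_floats(values):
    """Group a flat KeyFloats list into (input, (r, g, b, a)) keys."""
    return [(values[i], tuple(values[i + 1:i + 5]))
            for i in range(0, len(values), 5)]


def evaluate_gradient(keys, x):
    """Evaluate the gradient at input x with linear interpolation."""
    if x <= keys[0][0]:
        return keys[0][1]                # below the first key: closest key
    if x >= keys[-1][0]:
        return keys[-1][1]               # above the last key: closest key
    for (x0, c0), (x1, c1) in zip(keys, keys[1:]):
        if x0 <= x <= x1:
            f = (x - x0) / (x1 - x0)
            return tuple(a + (b - a) * f for a, b in zip(c0, c1))


keys = parse_key_floats([0.0, 0.0, 0.0, 0.0, 1.0,
                         1.0, 1.0, 1.0, 1.0, 1.0])
print(evaluate_gradient(keys, 0.5))
```

Spline and Step spans would need the matching KeyInts entry for each key and are not covered.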

File Structure

.

Scene

FirstFrame nfirst

LastFrame nlast

FrameStep nstep- The frame range and step size for rendering. In the simplest case, the first frame and frame step are 1, and the last frame is the number of frames to be rendered.

PreviewFirstFrame nfirst

PreviewLastFrame nlast

PreviewFrameStep nstep- The frame range and step size for previewing. These may be unrelated to the values for rendering. They also control the visible ranges of certain interface elements, for example the frame slider in the main window.

CurrentFrame nframe- The frame displayed in the interface when the scene is loaded.

FramesPerSecond gframes- This controls the duration of each frame.
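Because envelope key times are stored in seconds while the render range is given in frames, a loader typically needs to convert between the two. The following sketch shows the conversion and the list of frames implied by the range and step. The function names are hypothetical and a positive frame step is assumed.

```python
def render_frames(first, last, step):
    """Frames selected by FirstFrame, LastFrame and FrameStep."""
    return list(range(first, last + 1, step))


def frame_time(frame, fps):
    """Time in seconds at which a frame is sampled."""
    return frame / fps


print(render_frames(1, 10, 3))
print(frame_time(30, 30.0))
```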

Objects

LoadObjectLayer nlayer sfilename

ShowObject nvisibility ncolor- Begins a group of statements about an object. The layer number is the index recorded in the LAYR chunk of the object file.

ObjectMotion- The object's motion data follows this line.

UseBonesFrom nindex- Use the bones associated with the object given by the index.

Plugin sclass nlistpos sname

EndPlugin- Lists an object plug-in. Classes include CustomObjHandler, DisplacementHandler, ItemMotionHandler, and ObjReplacementHandler.

The listpos is a 1-based index into the class-specific plug-in list for the object. The list order generally determines the evaluation order. The name is the plug-in's server name. Any number of lines can occur between Plugin and EndPlugin. These contain data that belongs to the plug-in.

MorphAmount gamount [e]

MorphTarget nindex

MorphSurfaces non-off

MTSEMorphing non-off- Morphing displaces vertices by interpolating between their positions in source and target objects. The amount normally varies between 0.0 and 1.0, and it may be enveloped. The target is identified by object index. If MorphSurfaces is on, the source and target surface colors are blended.

MTSE is an acronym for Multiple Target Single Envelope. If this is turned on, the morph amount envelope of the base object (the first source) can control morphing between a chain of targets. Amounts between 0.0 and 1.0 morph between the base and its target, amounts between 1.0 and 2.0 morph between the first target and its target, and so on. Surface morphing ignores MTSE.
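The MTSE mapping from a single morph amount to a position in a chain of targets can be sketched like this. The helper name is hypothetical, and clamping the amount to the length of the chain is an assumption of this example, since the behavior outside that range isn't described.

```python
import math


def mtse_segment(amount, chain_length):
    """Return (segment, local_amount) for an MTSE morph amount.

    Segment 0 morphs the base object toward its target, segment 1 morphs
    that target toward its own target, and so on. chain_length is the
    number of targets in the chain (at least 1).
    """
    clamped = min(max(amount, 0.0), float(chain_length))   # assumption
    segment = min(int(math.floor(clamped)), chain_length - 1)
    return segment, clamped - segment


print(mtse_segment(1.25, 3))
```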

DisplacementMaps [t]

ClipMaps [t]- Displacement maps deform the object by displacing its vertices. Clip maps are used to stencil out, or clip, portions of the object's geometry.

ObjectDissolve gamount [e]- Dissolve makes the object transparent, and 100% dissolve (an amount of 1.0) removes it from rendering calculations altogether. This effect is distinct from surface transparency.

DistanceDissolve non-off

MaxDissolveDistance gdistance- The dissolve amount can be a function of distance, in which case the ObjectDissolve amount is ignored. The max distance is the distance at which the dissolve will be 1.0.

ParticleSize gsize [e]- .

LineThickness gpixels

ParticleThickness gpoints glines- .

AffectedByFog gamount- Each object can be affected differently by the global fog setting. The default amount is 1.0.

UnseenByRays non-off- If this is on, the object will not participate in reflection, refraction or radiosity raytracing. (It may still cast raytraced shadows.)

UnseenByCamera non-off- Turning this on is equivalent to fully dissolving the object. It won't participate in rendering, but it can still be seen and manipulated in preview windows.

ShadowOptions nflags- Shadow option bit flags, combined using bitwise-or.

1 - self shadow

2 - cast shadow

4 - receive shadow

ExcludeLight xlight- Excludes the specified light from shading and shadow calculations for the object. The light ID can include special values used to indicate radiosity (21000000) and caustics (22000000).

PolygonEdgeFlags nflags- Edge option bit flags, combined using bitwise-or. These flags control the rendering of different types of edges.

- 1 - Silhouette Edges

- Drawn at the border between the object and its background.

- 2 - Unshared Edges

- Drawn where two surfaces meet, but the polygons don't share an edge.

- 4 - Sharp Creases

- Drawn at abrupt changes in surface normal.

- 8 - Surface Borders

- Drawn at the shared edge of two polygons with different surfaces.

- 16 - Other Edges

- Drawn at polygon edges not meeting one of the other criteria.
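Both ShadowOptions and PolygonEdgeFlags store their settings as a single integer built with bitwise-or. A minimal sketch of decoding such a value is shown below; the table names and the `decode_flags` helper are hypothetical, and only the bit values and labels come from the lists above.

```python
# Bit values and labels as listed for ShadowOptions and PolygonEdgeFlags.
SHADOW_FLAGS = {
    1: "self shadow",
    2: "cast shadow",
    4: "receive shadow",
}
EDGE_FLAGS = {
    1: "Silhouette Edges",
    2: "Unshared Edges",
    4: "Sharp Creases",
    8: "Surface Borders",
    16: "Other Edges",
}

def decode_flags(value, table):
    """Return the labels of all bits in table that are set in value."""
    return [name for bit, name in sorted(table.items()) if value & bit]

if __name__ == "__main__":
    print(decode_flags(7, SHADOW_FLAGS))   # all three shadow options
    print(decode_flags(1 | 8, EDGE_FLAGS)) # silhouettes and surface borders
```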

PolygonEdgeThickness gsilhouette gunshared gsharp gsurface gother- The pixel thickness of each edge type. The default is 1.0.

PolygonEdgeColor gred ggreen gblue- The color of all edges.

PolygonEdgesZScale gscale- LightWave slightly scales the z-depth of edge pixels so that they are always in front of (or on top of) the polygons they belong to. The default scale factor is 0.998.

EdgeNominalDistance gdistance- If this is present, the edge thickness will vary inversely with distance. The distance value is the distance at which the thickness will be equal to the PolygonEdgeThickness.
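One reading of "vary inversely with distance" is that the drawn thickness equals the PolygonEdgeThickness scaled by the ratio of the nominal distance to the actual distance. The sketch below assumes exactly that relationship; the function name is hypothetical, and any clamping the renderer may apply to very near or very far edges is not described here and is not modeled.

```python
def edge_thickness(base_thickness, nominal_distance, distance):
    """Edge thickness in pixels at the given distance.

    Assumes thickness = base_thickness * nominal_distance / distance,
    so the result equals base_thickness when distance == nominal_distance.
    """
    if distance <= 0.0:
        raise ValueError("distance must be positive")
    return base_thickness * nominal_distance / distance

if __name__ == "__main__":
    # At twice the nominal distance the edge is drawn half as thick.
    print(edge_thickness(1.0, 10.0, 20.0))
```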

Global Illumination

.

AmbientColor gred ggreen gblue

AmbientIntensity gintensity [e]- Color and intensity of the ambient light.

EnableRadiosity non-off

RadiosityType ntype

RadiosityIntensity gintensity [e]- The default intensity is 1.0. The type is one of the following.

0 - Backdrop Only

1 - Monte Carlo

2 - Interpolated

RadiosityTolerance gtolerance

RadiosityRays nrays

RadiosityMinSpacing gdistance- These control radiosity sampling. The renderer typically doesn't fire radiosity rays for every pixel in the image. Nearby spots with similar surface normals can be assigned an incident radiosity level by interpolation. Tolerance is an arbitrary factor controlling the frequency of full radiosity evaluations. It ranges between 0 and 1, with a default of 0.3. A value of 0 forces full evaluation. For non-zero tolerances, the minimum spacing forces interpolation in small areas with frequent large changes in surface normal. The default is 0.02 meters. The maximum spacing is 100 times the minimum.

Currently the number of rays must be equal to 3n^2 for an integer n between 1 and 16. Rays are fired in a hemispherical sampling pattern with n latitudes and 3n longitudes, and the number of rays is presented to the user in the form "n x 3n."
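The constraint on RadiosityRays can be checked mechanically. The sketch below enumerates the sixteen permitted values and converts a ray count to the "n x 3n" label; the function names are hypothetical and are not part of the scene format.

```python
def valid_ray_counts():
    """Return the permitted RadiosityRays values, keyed by n (1..16)."""
    return {n: 3 * n * n for n in range(1, 17)}

def describe_rays(rays):
    """Return the "n x 3n" label for a ray count, or None if it is invalid."""
    for n, count in valid_ray_counts().items():
        if count == rays:
            return f"{n} x {3 * n}"
    return None

if __name__ == "__main__":
    print(describe_rays(48))   # 4 latitudes, 12 longitudes
    print(describe_rays(50))   # not of the form 3n^2
```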

EnableCaustics non-off

CausticIntensity gintensity [e]

CausticAccuracy nrays

CausticSoftness nsoftness- The number of rays fired for caustics can range from 0 to 10000. The default is 100, and the default softness is 20.

Lights

.

AddLight

LightName sname

ShowLight nvisibility ncolor- These begin a group of statements about a light.

LightMotion- The light's motion data follows this line.

LightColor gred ggreen gblue [e]

LightIntensity gintensity [e]- The color and intensity of the light.

AffectCaustics non-off- Controls whether the light participates in caustics calculations.

LightType ntype- The type can be

0 - Distant

1 - Point

2 - Spot

3 - Linear

4 - Area

LightFalloffType ntype

LightRange grange- The falloff type and range (or nominal distance). For each type, the intensity falloff is proportional to the listed expression for a given range r and actual distance d.

0 - No falloff

1 - Linear: (r - d) / r, for d < r

2 - Inverse: r / d

3 - Inverse Squared: (r / d)^2

LightConeAngle gangle [e]

LightEdgeAngle gangle [e]- The cone and soft edge angles for spotlights.
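The falloff expressions listed for LightFalloffType can be written out as a small function. This is a sketch under stated assumptions rather than the renderer's code: the function name is hypothetical, type 0 is taken to mean a constant factor of 1.0, the linear type is taken to be 0 at and beyond the range, and the inverse types are left unclamped for distances shorter than the range.

```python
def falloff_factor(falloff_type, r, d):
    """Intensity factor for range r and actual distance d (both positive)."""
    if r <= 0.0 or d <= 0.0:
        raise ValueError("range and distance must be positive")
    if falloff_type == 0:      # No falloff
        return 1.0
    if falloff_type == 1:      # Linear: (r - d) / r, for d < r
        return max((r - d) / r, 0.0)
    if falloff_type == 2:      # Inverse: r / d
        return r / d
    if falloff_type == 3:      # Inverse Squared: (r / d)^2
        return (r / d) ** 2
    raise ValueError("unknown falloff type")

if __name__ == "__main__":
    for kind in range(4):
        print(kind, falloff_factor(kind, 10.0, 20.0))
```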

VolumetricLighting non-off
{ Volumetrics
  { Options
    Flags n n n n n n n
    Sprite non-off nquality
    Dissolve gdissolve [e]
    Shadow gamount [e]
    Radius gradius [e]
    Base gdistance [e]
    Height gheight [e]
    Luminosity glevel [e]
    Opacity glevel [e]
    Attenuation glevel [e]
    RedShift gamount [e]
    Density gdensity [e]
    Color gred ggreen gblue [e]
  } [t]

Volumetric settings for the light. The first two flags are reserved, but following them in order are booleans for the following features.

Opacity Casts Shadows

Fade In Fog

Specify Medium Color (use the color of the medium given in Color)

Enable Texture

Texture Only

When Sprite mode is off, the quality is a number between 0 (low) and 4.
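As an illustration of the Flags line in the volumetric Options block, the sketch below skips the two reserved values and names the remaining five in the order given. The helper name and the sample line are hypothetical; only the flag order is taken from the description.

```python
# Feature names in the order they follow the two reserved flags.
VOLUMETRIC_FLAG_NAMES = [
    "Opacity Casts Shadows",
    "Fade In Fog",
    "Specify Medium Color",
    "Enable Texture",
    "Texture Only",
]

def parse_volumetric_flags(line):
    """Parse a 'Flags n n n n n n n' line into a dict of booleans."""
    tokens = line.split()
    if len(tokens) != 8 or tokens[0] != "Flags":
        raise ValueError("expected 'Flags' followed by seven integers")
    values = [int(token) for token in tokens[1:]]
    # values[0] and values[1] are reserved and ignored here.
    return {name: bool(v) for name, v in zip(VOLUMETRIC_FLAG_NAMES, values[2:])}

if __name__ == "__main__":
    # Made-up sample line, not taken from a real scene file.
    print(parse_volumetric_flags("Flags 0 0 1 0 1 0 0"))
```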

LensFlare non-off- .

FlareIntensity gintensity- .

FlareNominalDistance gdistance- .

FlareDissolve gdissolve [e]- .

LensFlareFade nflags- .

LensFlareOptions nflags- .

FlareRingColor gred ggreen gblue- .

FlareRingSize gsize- .

FlareDistortFactor gdistort- .

FlareStarFilter ntype- .

FlareRotationAngle gangle- .

FlareRandStreakInt gintensity- .

FlareRandStreakDens gdensity- .

FlareRandStreakSharp gsharpness- .

ShadowType ntype- .

ShadowColor gred ggreen gblue- .

CacheShadowMap non-off- .

ShadowMapSize nsize- .

UseConeAngle non-off- .

ShadowMapAngle gangle [e]- .

ShadowFuzziness gfuzz- .

Cameras

.

AddCamera

CameraName sname

ShowCamera nvisibility ncolor- .

CameraMotion

NumChannels nchannels

Channel nindex- .

ZoomFactor (envelope)- .

ResolutionMultiplier gfactor

FrameSize nwidth nheight- .

PixelAspect gaspect- .

MaskPosition nx ny nwidth nheight- .

MotionBlur ntype- .

FieldRendering non-off

ReverseFields non-off- .

BlurLength gframes (envelope)- .

LimitedRegion non-off

RegionLimits gx0 gx1 gy0 gy1- .

ApertureHeight gheight- .

Antialiasing ntype

EnhancedAA non-off- .

FilterType ntype- .

AdaptiveSampling non-off

AdaptiveThreshold gthreshold- .

Effects

.

BGImage

FGImage

FGAlphaImage (clip)- .

FGDissolve gdissolve (envelope)- .

FGFaderAlphaMode non-off- .

ForegroundKey non-off- .

LowClipColor gred ggreen gblue

HighClipColor gred ggreen gblue- .

SolidBackdrop non-off

BackdropColor gred ggreen gblue- .

ZenithColor gred ggreen gblue

SkyColor gred ggreen gblue

GroundColor gred ggreen gblue

NadirColor gred ggreen gblue- .

Plugin sclass nlistpos sserver

EndPlugin- .

FogType ntype- .

FogMinDistance gdistance

FogMaxDistance gdistance- .

FogMinAmount gamount

FogMaxAmount gamount- .

FogColor gred ggreen gblue- .

BackdropFog non-off- .

LimitDynamicRange non-off- .

DitherIntensity ndither- .

AnimatedDither non-off- .

Saturation glevel (envelope)- .

GlowEffect non-off- .

GlowIntensity gintensity [e]- .

GlowRadius gradius [e]- The radius is given in pixels.

Rendering and Interface

.

RenderMode nmode- .

RayTraceEffects nflags- .

DataOverlayLabel slabel- .

ViewConfiguration nview- .

DefineView nview- .

ViewType ntype- .

ViewAimpoint gx gy gz- .

ViewRotation gheading gpitch gbank- .

ViewZoomFactor gzoom- .

GridNumber nnumber- .

GridSize gsize- .

CameraViewBG non-off- .

ShowMotionPath non-off

ShowFogRadius non-off

ShowFogEffect non-off

ShowSafeAreas non-off

ShowFieldChart non-off- .

CurrentLight nindex

CurrentCamera nindex- .